Some of you have asked why you haven’t heard from me in a while. The truth is I had a brain injury in July that left me largely incapable of functioning normally, let alone with the bandwidth to write something of any intellectual depth. While I have thankfully made a full(ish) recovery, I’ve also since started a second Master’s at the University of Cambridge, where I’m researching AI Ethics. Every week, I’ve been distilling what I’m reading and learning into pages for my notebook. After some prodding from , I’ve decided to start publishing these notes in the hopes that it may provide some fodder for thought, or at the very least, debate.

From the Notebook is a weekly(ish) reflection based on everything I’m learning and reading about AI at the moment. So, without further ado:

Before I started taking an interest in AI, I instinctively thought of it as something moving linearly towards human-like intelligence, a teleological project of sorts with AGI as its endpoint. (Think ‘The Computer’ from Dexter’s Lab.)

Now, the more I learn about it, the more I’ve started to decouple AI from that narrative and reframe it instead as a socio-technical mirror: AI as a set of systems revealing what societies value, fear, and prioritise when they try to automate intelligence itself. The way the internet, marketing, and large corporations talk about it often suggests that AI is the be-all and end-all of intelligence. But it’s far more complex than that.

AI is a floating signifier; in other words, it can mean many different things to different people in different contexts. It can refer to a single discipline, to machine learning, to a cluster of disciplines, to an economic ideal, to a manufacturer’s philosophy, or even to a claim about the limits of consciousness.

Much of the confusion around what AI actually is stems from its nonlinear development. Three primary intellectual tributaries fed into its evolution: modern technology, cybernetics, and computer programming, each lending AI its own distinctive flavour. This ambiguity makes it difficult to define what AI is, assess its impact, and determine how to govern or regulate it.

Conceptualising AI through three key lenses can help us understand and contextualise it.

First, there’s AI as an instrument, viewed in a purely functional or utilitarian sense.

Second, there’s AI as infrastructure, reframed from a General Purpose Technology (like electricity) to a Large Technical System (like the internet), i.e. something that relies on and exists within other infrastructure.

Finally, there’s the ideological lens, which views AI both as a product of our existing ideologies and as an ideology in itself.

The ideological lens in particular is, in my opinion at least, underdiscussed in mainstream discourse.

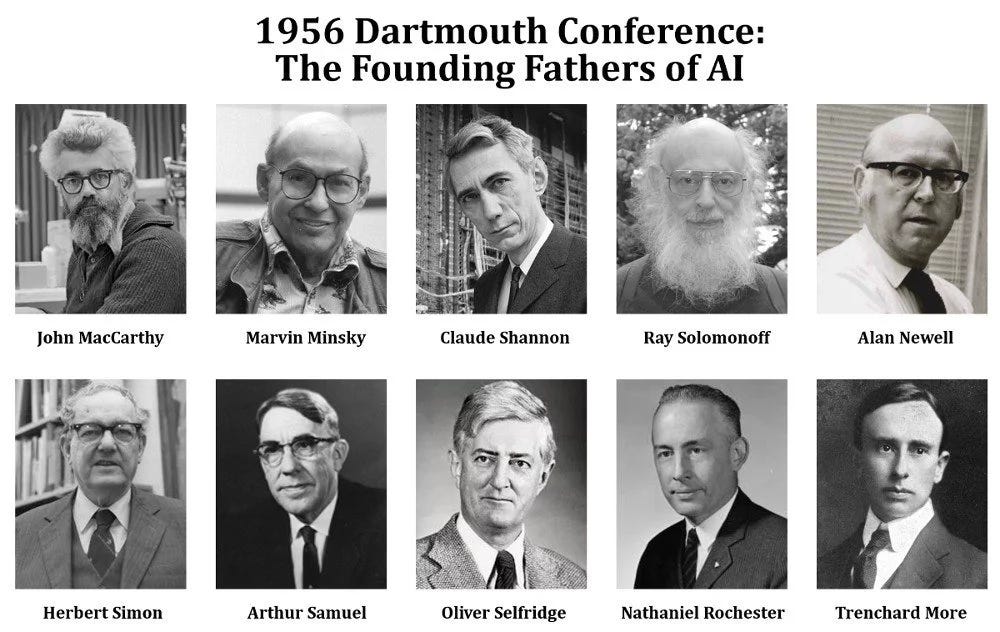

While AI was developed for military purposes, it was also born from the ideological motivations of its founders. From its origins at the Dartmouth Conference, AI was conceptualised by a group of affluent white men as the embodiment and replication of human intelligence. This suggests that the same ideological assumptions that shaped early AI, such as rationality as the measure of intelligence and efficiency as a virtue, still underpin today’s systems. Even when the rhetoric changes, the epistemic hierarchy remains.

Viewing AI through this lens also broadens how we evaluate its impact. For example, we can trace parallels between the racial wealth gap and digital redlining (algorithmic bias), both products of underlying ideology. AI utopianism is itself a powerful ideology, one that continues to drive much of Silicon Valley and the creators shaping these systems.

That isn’t to say that the ideological lens is the only one of value. In my opinion, the three lenses (instrumental, infrastructural, and ideological) are not mutually exclusive; rather, they function as a set of interlocking layers, or levels of abstraction.

When it comes to governance, AI must first and foremost be understood as a socio-technical system rather than merely a piece of technology, and this is where all three lenses become essential. We must ask: How does AI work? What are the mechanisms that make it function? How does it fit within our existing systems? What socio-technical environment is it a cog within? And crucially: which of our ideologies and biases has it inherited? What is it perpetuating? What agendas does it serve?

If policymakers treat AI purely as infrastructure, they’ll focus on reliability and interoperability; if as an instrument, they’ll focus on performance and safety; if as ideology, they must confront power and values. Seeing all three together highlights why AI governance cannot be purely technical. Ultimately, studying AI through these lenses forces a more uncomfortable question: what kind of intelligence, and by extension, what kind of humanity, are we choosing to reproduce?

TL;DR: AI can mean a lot of things, and blanket generalisations help no one. It must be understood as a socio-technical system within the broader context of our world.

What do you think? Which ideologies are you most afraid AI will entrench and amplify?

So happy you did this! Looking forward to getting a second-hand Cambridge education in AI, one newsletter at a time 🤓

Like ethics, AI is a floating signifier.

A floating signifier, isn't that such a lovely expression?

I hope every day you feel a little better, Eman 🙂